Primer of Linear Algebra and Matrices

Contents

Primer of Linear Algebra and Matrices¶

Notation¶

\({\mathcal M}(m,n)\) will denote the set of \(m\) by \(n\) matrices. \(A \in {\mathcal M}(m,n)\) has elements \(A_{ij}\), \(i \in \{1, \ldots, m\}\), \(j \in \{1, \ldots, n\}\).

We will identify \({\mathcal M}(1,n)\) and \({\mathcal M}(n,1)\) with \(\Re^n\), in a deliberate abuse of notation. Whether \(x \in \Re^n\) should be considered a column vector (an element of \({\mathcal M}(n,1)\)) or a row vector (an element of \({\mathcal M}(1,n)\)) should be clear from context or will be called out explicitly. For \(a \in {\mathcal M}(1,n)\) or \({\mathcal M}(n,1)\), we generally write only one index, suppressing the index that is always equal to 1 (for instance, we would write \(a_i\) in place of \(a_{1i}\) or \(a_{i1}\), respectively).

It is convenient to write matrices as rectangular arrays, e.g.,

The \(i\)th row of the matrix \(A \in {\mathcal M}(m,n)\) is the matrix \(B \in {\mathcal M}(1,n)\) with elements \(B_j = A_{ij}\), \(j=1, \ldots, n\).

The \(j\)th column of the matrix \(A \in {\mathcal M}(m,n)\), is the matrix \(C \in {\mathcal M}(m,j)\) with elements \(C_i = A_{ij}\), \(i=1, \ldots, m\).

The transpose of a matrix interchanges the row and column indices of the original matrix. If \(A \in {\mathcal M}(m,n)\) then the transpose of \(A\), denoted \(A'\) or \(A^T\), is an element of \({\mathcal M}(m,n)\) (If \(A\) is an \(m\) by \(n\) matrix then \(A'\) is an \(n\) by \(m\) matrix). If \(A\) has elements \(A_{ij}\), then \(A' = A^T\) has elements \(A_{ij}^T = A_{ji}\).

Scalar multiplication. If \(\alpha \in \Re\) and \(A \in {\mathcal M}(m,n)\), the matrix \(\alpha A \in {\mathcal M}(m,n)\) has elements \(\alpha A_{ij}\), \(i \in \{1, \ldots, m\}\), \(j \in \{1, \ldots, n\}\). I.e.,

Let \(-A = -1\cdot A\) be the matrix with elements \((-A)_{ij} = -A_{ij}\):

Matrix addition. If \(A, B \in {\mathcal M}(m,n)\), then the matrix \(A+B \in {\mathcal M}(m,n)\) has elements \(A_{ij}+B_{ij}\), \(i \in \{1, \ldots, m\}\), \(j \in \{1, \ldots, n\}\):

Let \(0_{mn} \in {\mathcal M}(m,n)\) denote the \(m\) by \(n\) matrix all of whose elements are zero; i.e., \((0_{mn})_{ij} = 0\), \(i \in \{1, \ldots, m\}\), \(j \in \{1, \ldots, n\}\):

With these definitions, \({\mathcal M}(m,n)\) is a linear vector space over the reals, with scalar identity element \(1\) and vector identity element \(0_{mn}\).

Transposition. The transpose of \(A \in {\mathcal M}(m,n)\), \(A^T = A' \in {\mathcal M}(n,m)\) has elements \((A')_{ij} = A_{ji}\), \(i \in \{1, \ldots, n\}\), \(j \in \{1, \ldots, m\}\):

If \(A = A'\), then \(A\) is symmetric.

Let \({\mathbf 1}_{n} \in {\mathcal M}(n,n)\) denote the \(n\) by \(n\) identity matrix with elements \((1_{n})_{ij} = 1\), \(i=j \in \{1, \ldots, n\}\), and \((1_{n})_{ij} = 0\), \(i \ne j\):

The identity matrix is an example of a diagonal matrix. A matrix \(A\) is diagonal if \(A_{ij}=0\) whenever \(i \ne j\).

Matrix multiplication. If \(A \in {\mathcal M}(m,n)\) and \(B \in {\mathcal M}(n,k)\), then \(AB \in {\mathcal M}(m,k)\) is the matrix with elements \((AB)_{ij} = \sum_{\ell=1}^m A_{i \ell}B_{\ell j}\):

If \(A \in {\mathcal M}(m,n)\), then \(A {\mathbf 1}_{n} = {\mathbf 1}_{m} A = A\).

A matrix \(A\) is square if it has the same number of rows and columns, that is, if \(A \in {\mathcal M}(n,n)\) for some \(n\).

Inverses. Given a square matrix \(A\in {\mathcal M}(n,n)\), \(A^{-1}\) is the inverse of \(A\) if \(A^{-1}A = {\mathbf 1}_n\).

Not every square matrix has an inverse; the zero matrix \({\mathbf 0}_n\) is an example. If \(A\) has an inverse, \(A\) is invertible. A square matrix that is not invertible is singular.

Properties of inverses:

If \(A\) has an inverse, the inverse is unique

\(AA^{-1} = A^{-1}A = {\mathbf 1}_n\).

\((A^{-1})^{-1} = A\).

\((A')^{-1} = (A^{-1})'\).

If \(A\) is invertible, \((\alpha A)^{-1} = \alpha^{-1} A^{-1}\) for all nonzero \(\alpha \in \Re\).

If \(A\) and \(B\) are invertible and have the same dimension, \((AB)^{-1} = B^{-1}A^{-1}\).

Linear Independence and Rank. Let \(\{a_j \} \in \Re^n\) be a collection of vectors. Recall that \(\{a_j \}\) are linearly independent if \(\sum_j \alpha_j a_j = {\mathbf 0}_n\) implies that \(\alpha_j = 0\) for all \(j\).

The row rank of a matrix \(A \in {\mathcal M}(m,n)\) is the maximal number of linearly independent rows it has; equivalently, it is the dimension of the subspace of \(\Re^m\) spanned by the rows of \(A\). The column rank of \(A\) is to the maximal number of linearly independent columns it has; equivalently, it is the dimension of the subspace of \(\Re^n\) spanned by the columns of \(A\). The row rank and the column rank if any matrix are equal; we denote them \({\mbox{rank}}(A)\).

If \(A \in {\mathcal M}(m,n)\) then \({\mbox{rank}}(A) \le \min(m, n)\).

If \(A\) and \(B\) can be multiplied, \({\mbox{rank}}(AB) \le \min({\mbox{rank}}(A), {\mbox{rank}}(B))\).

If \(A\) and \(B\) have the same dimensions, \({\mbox{rank}}(A+B) \le {\mbox{rank}}(A) + {\mbox{rank}}(B)\).

A square matrix \(A \in {\mathcal M}(n,n)\) is invertible if and only if \({\mbox{rank}}(A) = n\).

Definitions¶

Let \(A \in {\mathcal M}(n,n)\).

If \(A^{-1} = A'\), then \(A\) is orthogonal.

If \(A_{ij} = 0\) for all \(i \ne j\), \(A\) is diagonal.

If \(x'Ax \ge 0\) for all \(x \in \Re^n\), \(A\) is nonnegative definite (or positive semi-definite)

If \(x'Ax > 0\) for all \(x \in \Re^n\) except \(x = {\mathbf 0}\), \(A\) is positive definite

\(A\) has full rank if \({\mbox{rank}}(A) = n\). Otherwise, \(A\) is rank deficient.

Exercises¶

Show that if \(A \in {\mathcal M}(m,n)\) and \(B \in {\mathcal M}(n,p)\) then \((AB)' = B'A'\).

Show that the identity matrix is its own inverse.

Show that if \(A\) is invertible, its inverse is unique (a matrix has at most one inverse).

Show that if \(A\) is invertible, then \(A^{-1}A = AA^{-1}\).

Show that if \(A\) and \(B\) are both invertible matrices with the same dimensions, then \((AB)^{-1} = B^{-1}A^{-1}\).

Show that one row of a square matrix \(A\) is a multiple of another row of \(A\), then \(A\) is rank deficient.

Show that if a square matrix is rank deficient, it is not invertible.

Show that if \(A\) is a diagonal matrix with strictly positive diagonal elements, then \(A\) is positive definite.

Show that the row rank of a matrix is equal to its column rank.

Dot (inner) Products and the Euclidean norm.

If \(a, b \in \Re^n\) then the dot product of \(a\) and \(b\) is

If \(a \cdot b = 0\), we say \(a\) and \(b\) are orthogonal, and we write \(a \perp b\).

The Euclidean norm or 2-norm of \(a\) is

(The subscript 2 is often omitted for the 2-norm.)

For \(1 \le p < \infty\), the \(p\)-norm of \(a\) is

The infinity norm of \(a\) is

Outer Products.

If \(a, b \in \Re^n\) then the outer product of \(a\) and \(b\) is the matrix \(ab \in {\mathcal M}(n,n)\) with elements \((ab)_{ij} = a_ib_j\):

Exercises¶

Show that if \(R \in {\mathcal M}(n,n)\) is an orthogonal matrix, then for all \(x \in \Re^n\), \(\|Rx\|_2 = \|x\|_2\).

Show that if \(G\in {\mathcal M}(n,n) \) is positive definite, then \(\| \cdot \|_G\) is a norm on \(\Re^n\), where \(\|x\|_G \equiv \sqrt{x'Gx}\).

Show that the dot product is an inner product (over the real numbers) on \(\Re^n\).

Show that the 2-norm \( \|a \| \equiv \sqrt{a \cdot a}\) is a norm on \(\Re^n\).

Show that the 1-norm \(\|a\|_1 \equiv \sum_{i=1}^n | a_{i1} |\) is a norm on \(\Re^n\).

Show that \(\lim_{p \rightarrow \infty} \|a\|_p = \|a\|_\infty\).

R interlude¶

Let’s look at all these entities and operations in R.

# Create a matrix

A <- 1:12; # A is a 1-dimensional array of the integers 1-12

dim(A) <- c(4,3); # Change the dimension of A to be 4 by 3

print("A")

A # the matrix A, which is 4 by 3

# Another way to do the same thing

A <- matrix(

+ c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12), # data

nrow=4, # number of rows

ncol=3, # number of columns

byrow = FALSE) ; # read the data by column

A

# Another way

A <- matrix(

seq(1,12), # data

nrow=4, # number of rows

ncol=3, # number of columns

byrow = FALSE) ; # read the data by column

A

[1] "A"

| 1 | 5 | 9 |

| 2 | 6 | 10 |

| 3 | 7 | 11 |

| 4 | 8 | 12 |

| 1 | 5 | 9 |

| 2 | 6 | 10 |

| 3 | 7 | 11 |

| 4 | 8 | 12 |

| 1 | 5 | 9 |

| 2 | 6 | 10 |

| 3 | 7 | 11 |

| 4 | 8 | 12 |

# Transpose A

print("Transpose of A")

t(A) # the transpose of A, which is 3 by 4

A <- t(A); # overwrite A with its transpose: A is now a 3 by 4 array

A

# Another way to make this matrix

A <- matrix(

seq(1,12), # data

nrow=3, # number of rows

ncol=4, # number of columns

byrow = TRUE) ; # read the data by row

A

[1] "Transpose of A"

| 1 | 2 | 3 | 4 |

| 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 |

| 1 | 2 | 3 | 4 |

| 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 |

| 1 | 2 | 3 | 4 |

| 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 |

# Scalar multiplication

alpha <- 3;

A

alpha*A

| 1 | 2 | 3 | 4 |

| 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 |

| 3 | 6 | 9 | 12 |

| 15 | 18 | 21 | 24 |

| 27 | 30 | 33 | 36 |

# R understands matrix negation

-A

-1*A

| -1 | -2 | -3 | -4 |

| -5 | -6 | -7 | -8 |

| -9 | -10 | -11 | -12 |

| -1 | -2 | -3 | -4 |

| -5 | -6 | -7 | -8 |

| -9 | -10 | -11 | -12 |

# in R, you can create an m by n zero matrix as follows:

Z <- matrix(0, 3, 4);

Z

A + Z

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 0 |

| 1 | 2 | 3 | 4 |

| 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 |

# To create an n by n identity matrix in R, make a diagonal matrix of ones:

I = diag(1,3) # a diagonal matrix of 1s, with dimension 3 by 3

I

I2 = diag(c(1,1,1)) # a diagonal matrix with the vector (1,1,1) along the diagonal

I2

| 1 | 0 | 0 |

| 0 | 1 | 0 |

| 0 | 0 | 1 |

| 1 | 0 | 0 |

| 0 | 1 | 0 |

| 0 | 0 | 1 |

# R supports scalar addition; this is not a formal operation in linear algebra

alpha + A;

| 4 | 5 | 6 | 7 |

| 8 | 9 | 10 | 11 |

| 12 | 13 | 14 | 15 |

# Matrix addition

B <- 24:13; # B is an array with the integers 24 to 13 in descending order

dim(B) = c(4,3); # B is now a 4 by 3 array

B <- t(B); # B is now a 3 by 4 array

A # 1:12

B # 24:13

A+B # a 3 by 4 array; all entries are 25

| 1 | 2 | 3 | 4 |

| 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 |

| 24 | 23 | 22 | 21 |

| 20 | 19 | 18 | 17 |

| 16 | 15 | 14 | 13 |

| 25 | 25 | 25 | 25 |

| 25 | 25 | 25 | 25 |

| 25 | 25 | 25 | 25 |

# Matrix multiplication

C <- 1:4;

C

A%*%C # a 3 by 2 matrix

cat("the (3,1) entry should be", 9*1+10*2+11*3+12*4) # Check the (3,1) entry

- 1

- 2

- 3

- 4

| 30 |

| 70 |

| 110 |

the (3,1) entry should be 110

# now multiplying a matrix by a matrix The matrix multiplication operator is %*%

A %*% t(B) # a 3 by 3 matrix

t(A) %*% B # a 4 by 4 matrix

| 220 | 180 | 140 |

| 580 | 476 | 372 |

| 940 | 772 | 604 |

| 268 | 253 | 238 | 223 |

| 328 | 310 | 292 | 274 |

| 388 | 367 | 346 | 325 |

| 448 | 424 | 400 | 376 |

# Multiplication by the identity matrix

I3 <- diag(1,3); # I3 is the 3 by 3 identity matrix

I3 %*% A # multiplying from the left by the 3 by 3 identity matrix gives us back A

#

I4 <- diag(1,4); # I4 is the 4 by 4 identity matrix

A %*% I4 # multiplying from the right by the 4 by 4 identity matrix gives us back A

| 1 | 2 | 3 | 4 |

| 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 |

| 1 | 2 | 3 | 4 |

| 5 | 6 | 7 | 8 |

| 9 | 10 | 11 | 12 |

# R also does elementwise multiplication; again, this is not a formal operation in linear algebra

A*C # R copies C repeatedly to fill out the dimension of A,

# then multiplies elements of A by the corresponding element

| 1 | 8 | 9 | 8 |

| 10 | 6 | 28 | 24 |

| 27 | 20 | 11 | 48 |

# The dot product

a <- 1:5;

b <- c(rep(1,3), rep(2,2)); # new function: define a vector by repeating values

b

t(a) %*% b

# another way to do it

sum(a*b)

- 1

- 1

- 1

- 2

- 2

| 24 |

# the 2-norm

norm(as.matrix(a), type="2") # the "norm" function operates on matrices--not lists.

# The function "as.matrix()" turns the list a into a matrix.

# alternative 1

sqrt(sum(a*a))

# alternative 2

sqrt(t(a) %*% a)

# check:

sqrt(1^2+2^2+3^2+4^2+5^2)

| 7.416198 |

# the 1-norm

norm(as.matrix(a), type="o")

# alternative

sum(abs(a))

# check:

1+2+3+4+5

Interlude: a bit more computation and R¶

The notation \(X \sim P\) means that the random variable \(X\) has the probability distribution \(P\). Recall that \(U[a, b]\) denotes the uniform distribution on the interval \([a, b]\).

Recall that the R function runif() generates uniformly distributed pseudo-random numbers between 0 and 1. To generate pseudo-random numbers that are uniformly distributed on the interval \([a, b]\), we can re-scale numbers from the interval \([0,1]\) as follows:

If \(X \sim U[0,1]\), then \(a+(b-a)X \sim U[a,b]\).

Example: to generate pseudo-random numbers on the interval \([-1, 2]\), we can generate pseudo-random numbers on the interval \([0,1]\), multiply them by 3, and subtract 1 from the result.

The R function runif() takes options min and max to set the range in this way.

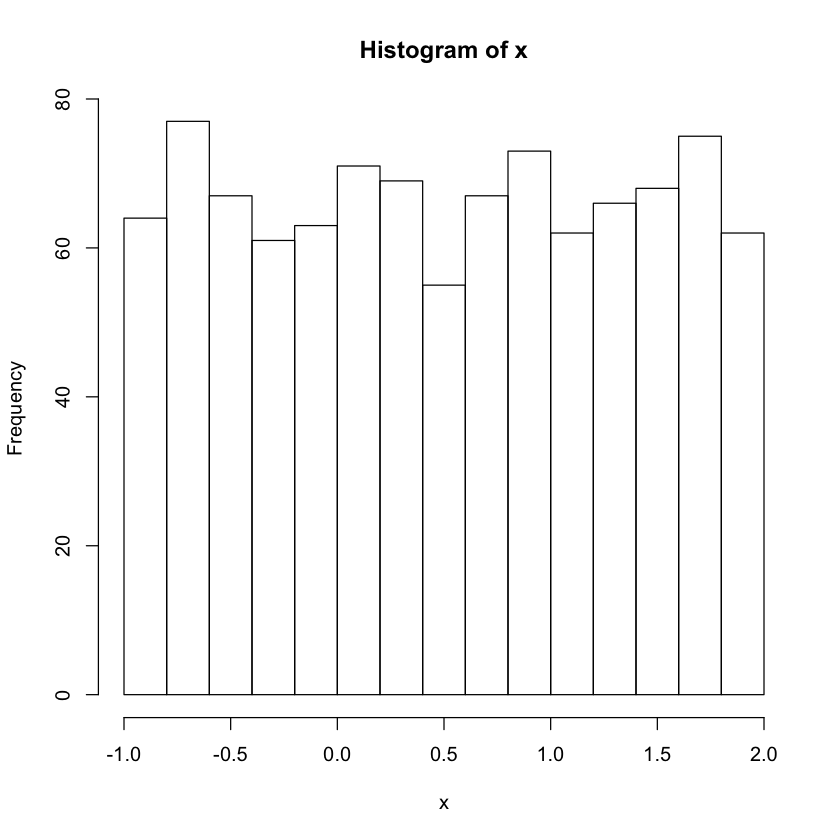

# Here's how to plot a histogram in R. You will need this for the exercise below.

x <- runif(1000, min=-1, max=2) # generate pseudorandom numbers uniformly distributed between -1 and 2.

hist(x) # plot a histogram of the results

Exercises¶

Write an R script that computes the \(p\)-norm for general \(p\), two different ways, from scratch. Do not use the “norm” function.

Let \(A\) be the 2 by 2 rotation matrix:

- Prove algebraically that $A(\theta)$ is an orthogonal matrix.

- Write an R script that checks whether $A(\theta)$ is orthogonal by computing $A(\theta)A'(\theta)$ numerically for 1,000 values of $\theta$ selected at random, uniformly from $[0, 2\pi]$:

- For each randomly selected value of $\theta$, calculate $\sum_{i=1}^2 \sum_{j=1}^2 \left ( (A(\theta)A'(\theta))_{ij} - ({\mathbf 1}_2)_{ij} \right )^2$. (Note: this is the square of the Frobenius matrix norm of the difference between $AA'$ and $\mathbf 1$. You can code it from scratch or use the R function <tt>norm()</tt> by setting <tt>type="F"</tt> in the call to <tt>norm</tt>.)

- Find the maximum value of those 1,000 sums of squares.

- Plot a histogram of those 1,000 sums of squares.

Trace, Determinant, Inverse¶

Trace¶

If \(A \in {\mathcal M}(n,n)\), the trace of \(A\) is the sum of the diagonal elements of \(A\):

Determinants¶

The determinant of a square matrix is a real number; how to compute the determinant of a matrix is described below.

Properties of determinants:

\(|A| = |A'|\).

If \(A\) and \(B\) can be multiplied, \(|AB| = |A|\cdot |B|\).

\(|{\mathbf 1}_n| = 1\).

If \(A\) is invertible, \(|A^{-1}| = |A|^{-1}\).

\(A\) is invertible if and only if its determinant is nonzero.

Computing the determinant¶

The determinant of a matrix \(A \in {\mathcal M}(2,2)\) is

The determinant of a matrix \(A \in {\mathcal M}(3,3)\) is

Let \(S_n\) be the set of all permutations of \({1, \ldots, n}\). Define the signature of the permutation \(\pi \in S_n\), \(\mbox{sgn}(\pi)\) to be \(+1\) if \(\pi\) can be derived from \((1, \ldots, n)\) by interchanging an even number of pairs of elements and \(-1\) if \(\pi\) can be derived from \((1, \ldots, n)\) by interchanging an even number of pairs of elements.

E.g., for \(n=4\), \(\mbox{sgn}(1,3,2,4) = -1\), since this permutation can be derived from \((1,2,3,4)\) by swapping \(3\) and \(2\).

The determinant of a matrix \(A \in {\mathcal M}(n,n)\) is then given by

Inverses¶

If \(A \in {\mathcal M}(n,n)\) is invertible, its inverse is

where \(\mbox{adj}(A)\) is the adjugate of \(A\), the transpose of the matrix of co-factors. The \((i,j)\) element of the co-factor matrix of \(A\) is the determinant of the matrix that results from deleting the \(i\)th row and \(j\)th column of \(A\), multiplied by \((-1)^{i+j}\).

For instance, if \(A \in {\mathcal M}(2,2)\), its adjugate is

If \(A \in {\mathcal M}(3,3)\), its adjugate is

Examples of computing inverses¶

Let

Then

and \(|A| = 1\cdot7 - 6\cdot2 = 7-12 = -5 \ne 0\), so \(A\) is invertible.

Let’s check the result:

Let’s compute another: take

Then

Hence, \(A\) is not full rank and is not invertible. (The second row is a multiple of the first row, so the rows are linearly dependent.)

# Trace in R

A <- array(1:5,dim=c(5,5))

A

sum(diag(A)) # diag() extracts the diagonal of a matrix; sum() adds the elements of a list

| 1 | 1 | 1 | 1 | 1 |

| 2 | 2 | 2 | 2 | 2 |

| 3 | 3 | 3 | 3 | 3 |

| 4 | 4 | 4 | 4 | 4 |

| 5 | 5 | 5 | 5 | 5 |

# Determinant

A <- array(1:5,dim=c(5,5))

A # the rows and columns are multiples of each other, so A is singular (not invertible)

det(A) # 0

# The set of singular matrices has Lebesgue measure 0, so a random matrix is nonsingular with probability 1

A <- array(runif(25), dim=c(5,5)) # 5 by 5 matrix with uniformly distributed elements

A

det(A)

| 1 | 1 | 1 | 1 | 1 |

| 2 | 2 | 2 | 2 | 2 |

| 3 | 3 | 3 | 3 | 3 |

| 4 | 4 | 4 | 4 | 4 |

| 5 | 5 | 5 | 5 | 5 |

| 0.44494921 | 0.52317376 | 0.03632915 | 0.32502890 | 0.11407382 |

| 0.1517755 | 0.8576347 | 0.6506324 | 0.7805578 | 0.1062984 |

| 0.7462715 | 0.4619980 | 0.1625752 | 0.6339368 | 0.6016028 |

| 0.60668472 | 0.64195808 | 0.09207884 | 0.71420528 | 0.62334836 |

| 0.79192360 | 0.03372087 | 0.35195751 | 0.07350370 | 0.58952493 |

# Inverse

solve(A) # this is almost never the right thing to do

| 1.6532796 | -0.3517214 | 3.0738478 | -3.0826031 | -0.1338509 |

| 1.86271860 | 0.08329378 | -5.52354965 | 3.84048152 | 1.20043061 |

| -0.7561739 | 1.1796194 | -0.2336376 | -0.8476521 | 1.0683307 |

| -1.5079462 | 0.3122524 | 6.2728416 | -3.5774260 | -2.3831924 |

| -1.6879742 | -0.2754759 | -4.4558594 | 4.8733703 | 1.4667515 |

Eigenvalue / Eigenvector decompositions¶

Let \(A \in {\mathcal M}(n,n)\) be a square matrix. An eigenvector of \(A\) is a non-zero vector \(v \in \Re^n\) such that, for some scalar \(\lambda \in \Re\), \(\lambda \ne 0\), \(Av = \lambda v\). That is, the effect of multiplying \(v\) by the matrix \(A\) is to “stretch or shrink” \(v\) by the scalar \(\lambda\), without changing the direction of \(v\) (except perhaps to change its sign). The scalar \(\lambda\) is the eigenvalue associated with the eigenvector \(v\).

If \(A\) is a diagonal matrix, its eigenvectors are the coordinate vectors \(e_1 = (1, 0, \ldots, 0)\), \(e_2 = (0, 1, \ldots, 0)\), \(e_n = (0, 0, \ldots, 1)\), and the associated eigenvalues are the diagonal elements of \(A\). That is, the eigenvalue associated with the eigenvector \(e_j\) is \(A_{jj}\).

If \(Av = \lambda v\), then \((A- \lambda {\mathbf 1}_n)v = 0\). For that to occur with \(v \ne {\mathbf 0}_n\), it is necessary that the determinant of \(A - \lambda {\mathbf 1}_n\) be zero. That condition, \(\left |A - \lambda {\mathbf 1}_n \right | = 0\) means that any eigenvalue must be a root of an \(n\)th degree polynomial in \(\lambda\) called the characteristic polynomial of the matrix \(A\). (The leading term in the polynomial is \((-1)^n \lambda^n\).) It follows that \(A\) has at most \(n\) real eigenvalues and always has \(n\) complex eigenvalues (counting multiple roots as often as they occur).

Suppose that \(A\) has the \(n\) complex eigenvalues \(\{\lambda_i \}\) (counting multiples as often as they occur).

Then:

\(\mbox{trace}(A) = \sum_{i=1}^n \lambda_i\): the trace of \(A\) is the sum of its eigenvalues.

\(\left | A \right | = \prod_{i=1}^n \lambda_i\): the determinant of \(A\) is the product of its eigenvalues.

The eigenvalues of \(A^k\) are \(\{\lambda_i^k\}_{k=1}^n\).

If all \(\{\lambda_i\}\) are real and non-negative, \(A\) is positive semi-definite.

If all \(\{\lambda_i\}\) are real and positive, then \(A\) is positive definite.

If all \(\{\lambda_i\}\) are real and nonzero, then \(A\) is invertible, and the eigenvalues of \(A^{-1}\) are \(\{\lambda_i^{-1}\}\).

Let \(V \in {\mathcal M}(n,n)\) be the matrix whose columns are the eigenvectors of a matrix \(A\), and let \(\Lambda\) be the diagonal matrix of the corresponding eigenvalues of \(A\). Then \(A = V \Lambda V^{-1}\). This factorization is the eigen-decomposition of \(A\).

Suppose \(A\) is positive semi-definite. Then \(A\) can be written

where \(R\) is an orthogonal matrix and \(\Lambda\) is the diagonal matrix whose diagonal elements are the eigenvalues of \(A\), all of which are non-negative. The columns of \(R\) are the eigenvectors of \(A\). If \(A\) is positive definite, then the diagonal elements of \(\Lambda\) are strictly positive.

Matrix square-roots¶

Suppose that \(\Lambda\) is a diagonal matrix with all non-negative elements.

Define the matrix \(\Lambda^{1/2}\) by \((\Lambda^{1/2})_{ij} = \sqrt{\Lambda_{ij}}\):

Then \(\Lambda^{1/2}\) is the matrix square root of \(\Lambda\), in the sense that \(\Lambda^{1/2}\Lambda^{1/2} = \Lambda\).

Suppose \(A \in {\mathcal M}(n,n)\) is positive semi-definite, with the eigen-decomposition \(R\Lambda R'\), \(R\) orthogonal, \(\Lambda\) diagonal with non-negative elements. Define the matrix

Then \(A^{1/2}\) is the matrix square root of \(A\), in the sense that \(A^{1/2}A^{1/2} = A\).

Inverses of Positive-Definite Matrices¶

Suppose that \(\Lambda \in {\mathcal M}(n,n)\) is a diagonal matrix with strictly positive diagonal elements (i.e., \(\Lambda\) is a diagonal, positive definite matrix). Then the inverse of \(\Lambda\) has elements

We can verify this by direct multiplication:

Suppose that \(A \in {\mathcal M}(n,n)\) is positive definite, with the eigen-decomposition \(R\Lambda R'\). Then \(A^{-1} = R \Lambda^{-1} R'\).

Exercises¶

Show that if \(\Lambda \in {\mathcal M}(n,n)\) is a diagonal matrix with non-negative elements, then the matrix with elements \((\Lambda^{1/2})_{ij} = \sqrt{\Lambda_{ij}}\) is its matrix square root.

Show that if \(A \in {\mathcal M}(n,n)\) is positive definite with the eigen-decomposition \(R\Lambda R'\), then \(A^{1/2} \equiv R\Lambda^{1/2}R'\) is the matrix square root of \(A\).

# Eigen decompositions in R

A <- array(c(1,1,1,1,2,3,0,1,1), dim=c(3,3));

eigen(A)

# eigen() is an example of a function that returns more than one variable. Here's how you access them:

decomp <- eigen(A);

decomp$values

decomp$vectors

- $values

- 3.65109340893717

- 0.726109445035784

- -0.377202853972958

- $vectors

-0.2268408 -0.8167856 0.3520763 -0.6013762 0.2237099 -0.4848805 -0.7660874 0.5318037 0.8005830

- 3.65109340893717

- 0.726109445035784

- -0.377202853972958

| -0.2268408 | -0.8167856 | 0.3520763 |

| -0.6013762 | 0.2237099 | -0.4848805 |

| -0.7660874 | 0.5318037 | 0.8005830 |

# let's check whether these really are eigenvectors!

ev1 <- decomp$vectors[,1]; # the first column of the eigenvector matrix: an eigenvector

ev1

A %*% ev1 / decomp$values[1] # this should give us back the first eigenvector

- -0.2268408088325

- -0.601376173173819

- -0.766087426986653

| -0.2268408 |

| -0.6013762 |

| -0.7660874 |

# check that the eigen-decomposition of A actually holds

A

decomp$vectors %*% diag(decomp$values) %*% solve(decomp$vectors)

| 1 | 1 | 0 |

| 1 | 2 | 1 |

| 1 | 3 | 1 |

| 1.000000e+00 | 1.000000e+00 | -5.551115e-16 |

| 1 | 2 | 1 |

| 1 | 3 | 1 |

# check that the sum of the eigenvalues is the trace

sum(diag(A))

sum(decomp$values)

# check that the product of the eigenvalues is the determinant

det(A)

prod(decomp$values)

# check whether we can invert A using its eigen-decomposition

Ainv <- decomp$vectors %*% diag(1/decomp$values) %*% solve(decomp$vectors);

Ainv

A %*% Ainv # this should be the identity matrix

| 1 | 1 | -1 |

| 1.026956e-15 | -1.000000e+00 | 1.000000e+00 |

| -1 | 2 | -1 |

| 1.000000e+00 | -6.661338e-16 | 1.221245e-15 |

| -1.110223e-16 | 1.000000e+00 | -4.440892e-16 |

| 8.881784e-16 | 1.110223e-15 | 1.000000e+00 |

# this matrix is not positive-definite, so the eigenvector matrix is not orthogonal: its

# transpose is not its inverse. Let's verify that:

t(decomp$vectors)

solve(decomp$vectors)

| -0.2268408 | -0.6013762 | -0.7660874 |

| -0.8167856 | 0.2237099 | 0.5318037 |

| 0.3520763 | -0.4848805 | 0.8005830 |

| -0.5152664 | -0.9918796 | -0.3741398 |

| -1.0057615 | -0.1039075 | 0.3793761 |

| 0.1750332 | -0.8801187 | 0.6390625 |

# Now let's try it with a positive-definite matrix. We can make a positive definite matrix from a nonsingular matrix

Apd <- t(A) %*% A;

Apd

decomp <- eigen(Apd);

decomp$values # the eigenvalues should be non-negative

decomp$vectors %*% t(decomp$vectors) # and the matrix of eigenvectors should be orthogonal

| 3 | 6 | 2 |

| 6 | 14 | 5 |

| 2 | 5 | 2 |

- 18.4052979838975

- 0.481973433502735

- 0.11272858259973

| 1.000000e+00 | -1.110223e-16 | -1.665335e-16 |

| -1.110223e-16 | 1.000000e+00 | -5.551115e-17 |

| -1.387779e-16 | -5.551115e-17 | 1.000000e+00 |

# the eigenvector matrix should be orthogonal. Let's check:

t(decomp$vectors)

solve(decomp$vectors) # should be equal to the transpose

| 0.3797058 | 0.8709960 | 0.3117524 |

| 0.8226923 | -0.1638011 | -0.5443773 |

| 0.4230850 | -0.4631795 | 0.7787579 |

| 0.3797058 | 0.8709960 | 0.3117524 |

| 0.8226923 | -0.1638011 | -0.5443773 |

| 0.4230850 | -0.4631795 | 0.7787579 |

# lets make the matrix square root of Apd

ApdHalf <- decomp$vectors %*% diag(sqrt(decomp$values)) %*% t(decomp$vectors);

ApdHalf

ApdHalf %*% ApdHalf

| 1.148516 | 1.259494 | 0.307545 |

| 1.259494 | 3.345303 | 1.105722 |

| 0.3075450 | 1.1057220 | 0.8263141 |

| 3 | 6 | 2 |

| 6 | 14 | 5 |

| 2 | 5 | 2 |

Solving Linear Systems¶

We will often need to solve linear equations of the form \(Ax = b\), where \(A \in {\mathcal M}(m,n)\) and \(b \in {\mathcal M}(1,m)\) are known, and \(x \in {\mathcal M}(n,1)\) is the unknown vector we seek.

If the rows of \(A\) are linearly independent and \(n \ge m\), there is at least one solution. If \(n < m\), there may be no solution.

For the moment, suppose \(A\) is an invertible square matrix with dimension \(n\). Then, formally, we can find \(x\) by multiplying both sides by the inverse of \(A\):

Since \(A^{-1}A = {\mathbf 1}_n\), this becomes

However, this is not a good way to find \(x\): it is unnecessarily numerically unstable (ill conditioned) compared with other ways of solving the linear system.

Better methods include matrix factorizations or decompositions (LU, QR, Cholesky), Gaussian elimination, etc. We won’t study those methods in detail, but will present Gaussian elimination briefly.

Gaussian elimination. Gaussian elimination is a method that can be used to find the rank of a matrix and to solve a linear system. Gaussian elimination involves three operations:

swap two rows

multiply a row by a nonzero scalar

add a scalar times one row to another row

All of those operations preserves the linear system: a vector \(x\) solves the original system if and only if it solves the transformed system.

The goal of Gaussian elimination is to use those operations to transform \(A\) into upper triangular form, in which all elements “below” the diagonal are zero. More specifically, it transforms to row eschelon form, in which the first non-zero element of each row is to the right of the first non-zero element of the row above it. It is trivial to solve a linear system (using back substitution) once it is in upper-triangular form, as we shall see.

Here’s an illustration. Let

and

We start by forming the “augmented” matrix that consists of appending \(b\) as an additional column of \(A\):

Divide the first row by 2

Subtract 6 times the first row from the second row:

Subtract three times the first row from the third row:

Multiply the second and third rows by -1 and swap them:

Divide the second row by 2:

Subtract 11 times the second row from the third row:

Multiply the last row by -1/9.5:

We now use back substitution to find \(x\):

\(x_3 = 0.63158\).

\(x_2 + 1.5\times 0.63158 = 1\), so \(x_2 = 0.05263\).

\(x_1 + 2\times 0.05263 + 2\times0.63158 = 1\), so \(x_1 = -0.36842\).

This gives

Multiplying by \(A\) gives

# Let's try this in R

A <- array(c(2,6,3,4,1,4,4,5,3), dim=c(3,3));

A

b <- c(2,1,1);

b

solve(A,b)

| 2 | 4 | 4 |

| 6 | 1 | 5 |

| 3 | 4 | 3 |

- 2

- 1

- 1

- -0.368421052631579

- 0.0526315789473685

- 0.631578947368421

Gaussian elimination, continued.¶

What happens if \(A\) is rank deficient?

Consider the matrix

Let’s perform Gaussian elimination on it. Divide the first row by 2

Subtract 6 times the first row from the second row:

Subtract ten times the first row from the third row:

Subtract the 2nd row from the third row:

The third row is now zero: there are only two nonzero rows once we transform to upper-triangular form. The rank of the matrix \(A\) is therefore 2, not 3 (it is rank deficient). This matrix \(A\) is not invertible.

# solve a linear system using R

A <- array(runif(25), dim=c(5,5)) # 5 by 5 matrix with uniformly distributed elements

b <- rep(1,5);

x <- solve(A,b);

x

A %*% x # check that x solves the linear system

- 3.28678678551627

- 0.31387097276445

- -3.02500161149644

- 1.36130631294013

- 0.650061555869014

| 1 |

| 1 |

| 1 |

| 1 |

| 1 |

Ainv = solve(A);

Ainv

x <- Ainv %*% b;

x

A %*% x

| 4.992164 | 2.381509 | 5.862320 | -6.310363 | -3.638844 |

| 0.282778 | -1.400723 | -1.985402 | 1.730754 | 1.686464 |

| -4.7926526 | -1.1627247 | -2.8455445 | 4.9559795 | 0.8199406 |

| 0.7890497 | -0.3361745 | 0.2298502 | -0.7290643 | 1.4076452 |

| -0.008949328 | 1.184794406 | -0.242017138 | 0.032201496 | -0.315967880 |

| 3.286787 |

| 0.313871 |

| -3.025002 |

| 1.361306 |

| 0.6500616 |

| 1 |

| 1 |

| 1 |

| 1 |

| 1 |

Next chapter: Probability,Random Vectors, and the Multivariate Normal Distribution