Probability, Random Vectors, and the Multivariate Normal Distribution

Probability¶

This course assumes that you have already had an introduction to probability, random variables, expectation, and so on, so the treatment here is cursory. It is also informal, omitting important technical concepts such as sigma-algebras, measurability, and the like.

Modern probability theory starts with Kolmogorov’s Axioms; here is an informal statement of the axioms. For more detail (though still a very informal treatment), see these chapters of SticiGui: Probability: Philosophy and Mathematical Background, Set theory, and Probability: Axioms and Fundaments.

Let \(S\) denote the outcome space, the set of all possible outcomes of a random experiment, and let \(\{A_i\}_{i=1}^\infty\) be subsets of \(S\). (Note that here \(A\) denotes a subset, not a matrix.) Then any probability function \({\mathbb P}\) must satisfy these axioms:

For every \(A \subset S\), \({\mathbb P}(A) \ge 0\) (probabilities are nonnegative)

\({\mathbb P}(S) = 1\) (the chance that something happens is 100%)

If \(A_i \cap A_j = \emptyset\) for \(i \ne j\), then \({\mathbb P} \left ( \cup_{i=1}^\infty A_i \right ) = \sum_{i=1}^\infty {\mathbb P}(A_i)\) (if a countable collection of events is pairwise disjoint, then the chance that any of the events occurs is the sum of the chances that they occur individually)

These axioms have many useful consequences, among them that \({\mathbb P}(\emptyset) = 0\), \({\mathbb P}(A^c) = 1 - {\mathbb P}(A)\), and \({\mathbb P}(A \cup B) = {\mathbb P}(A) + {\mathbb P}(B) - {\mathbb P}(AB)\), where \(AB\) denotes the intersection \(A \cap B\).

Definitions¶

Let \(A\) and \(B\) be subsets of outcome space \(S\).

If \(AB = \emptyset\), then \(A\) and \(B\) are mutually exclusive.

If \({\mathbb P}(AB) = {\mathbb P}(A){\mathbb P}(B)\), then \(A\) and \(B\) are independent.

If \({\mathbb P}(B) > 0\), then the conditional probability of \(A\) given \(B\) is \({\mathbb P}(A | B) \equiv {\mathbb P}(AB)/{\mathbb P}(B)\).

Independence is an extremely specific relationship. At one extreme, \(AB = \emptyset\); then \({\mathbb P}(AB) = 0 \le {\mathbb P}(A){\mathbb P}(B)\). At another extreme, either \(A\) is a subset of \(B\) or vice versa; then \({\mathbb P}(AB) = \min({\mathbb P}(A),{\mathbb P}(B)) \ge {\mathbb P}(A){\mathbb P}(B)\). Independence lies at a precise point in between.
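The three cases in the preceding paragraph can be checked by enumeration. The sketch below uses one roll of a fair die as the experiment; the die and the events `A`, `B`, `C`, `D` are illustrative choices, not part of the text above.

```r
# Outcome space for one roll of a fair die; every outcome has chance 1/6.
S <- 1:6
prob <- function(event) length(event) / length(S)

A <- c(2, 4, 6)      # the roll is even
B <- 1:4             # the roll is at most 4
C <- c(1, 3, 5)      # the roll is odd (disjoint from A)
D <- c(2, 4)         # a subset of A

# Independent pair: P(AB) equals P(A)P(B)
p_AB <- prob(intersect(A, B))
c(p_AB, prob(A) * prob(B))

# Mutually exclusive pair: P(AC) = 0, which is below P(A)P(C)
p_AC <- prob(intersect(A, C))
c(p_AC, prob(A) * prob(C))

# Nested pair: P(AD) = min(P(A), P(D)), which is above P(A)P(D)
p_AD <- prob(intersect(A, D))
c(p_AD, prob(A) * prob(D))
```

Here \(A\) and \(B\) are independent even though they overlap, which illustrates that independence is a statement about probabilities, not about whether the sets intersect.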

Random variables¶

Briefly, a real-valued random variable \(X\) can be characterized by its probability distribution, which specifies (for a suitable collection of subsets of the real line \(\Re\) that comprises a sigma-algebra), the chance that the value of \(X\) will be in each such subset. There are technical requirements regarding measurability, which generally we will ignore. Perhaps the most natural mathematical setting for probability theory involves Lebesgue integration; we will largely ignore the difference between a Riemann integral and a Lebesgue integral.

Let \(P_X\) denote the probability distribution of the random variable \(X\). Then if \(A \subset \Re\), \(P_X(A) = {\mathbb P} \{ X \in A \}\). We write \(X \sim P_X\), pronounced “\(X\) is distributed as \(P_X\)” or “\(X\) has distribution \(P_X\).”

If two random variables \(X\) and \(Y\) have the same distribution, we write \(X \sim Y\) and we say that \(X\) and \(Y\) are identically distributed.

Real-valued random variables can be continuous, discrete, or mixed (general).

Continuous random variables have probability density functions with respect to Lebesgue measure. If \(X\) is a continuous random variable, there is some nonnegative function \(f(x)\), the probability density of \(X\), such that for any (suitable) set \(A \subset \Re\),

\[ {\mathbb P} \{ X \in A \} = \int_A f(x) dx. \]

Since \({\mathbb P} \{ X \in \Re \} = 1\), it follows that \(\int_{-\infty}^\infty f(x) dx = 1\).

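Example. Let \(\lambda > 0\), and let \(f(x) = \lambda e^{-\lambda x}\) for \(x \ge 0\), and \(f(x) = 0\) otherwise. Clearly \(f(x) \ge 0\), and

\[ \int_{-\infty}^\infty f(x) dx = \int_0^\infty \lambda e^{-\lambda x} dx = \left . - e^{-\lambda x} \right |_0^\infty = 1. \]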

Hence, \(\lambda e^{-\lambda x}\) can be the probability density of a continuous random variable. A random variable with this density is said to be exponentially distributed. Exponentially distributed random variables are used to model radioactive decay and the failure of items that do not “fatigue.” For instance, the lifetime of a semiconductor after an initial “burn-in” period is often modeled as an exponentially distributed random variable. It is also a common model for the occurrence of earthquakes (although it does not fit the data well).
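As a numerical companion to the exponential example, the following sketch integrates the density in R. The rate `lambda = 2.5` and the interval `[0.2, 1]` are arbitrary illustrative values; `integrate()`, `dexp()`, and `pexp()` are base R functions.

```r
lambda <- 2.5    # arbitrary positive rate, chosen only for illustration
f <- function(x) ifelse(x >= 0, lambda * exp(-lambda * x), 0)

# Numerical integral of the density over [0, Inf); it should be close to 1
total <- integrate(f, lower = 0, upper = Inf)$value
total

# The hand-coded density agrees with R's built-in dexp()
x <- c(0, 0.1, 1, 3)
max_diff <- max(abs(f(x) - dexp(x, rate = lambda)))
max_diff

# Chance that X falls in A = [0.2, 1], computed as the integral of f over A
p_A <- integrate(f, lower = 0.2, upper = 1)$value
c(p_A, pexp(1, rate = lambda) - pexp(0.2, rate = lambda))
```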

Example. Let \(a\) and \(b\) be real numbers with \(a < b\), and let \(f(x) = \frac{1}{b-a}\) for \(x \in [a, b]\) and \(f(x)=0\) otherwise. Then \(f(x) \ge 0\) and \(\int_{-\infty}^\infty f(x) dx = \int_a^b \frac{1}{b-a} dx = 1\), so \(f(x)\) can be the probability density function of a continuous random variable. A random variable with this density is said to be uniformly distributed on the interval \([a, b]\).

Discrete random variables assign all their probability to some countable set of points \(\{x_i\}_{i=1}^n\), where \(n\) might be infinite. Discrete random variables have probability mass functions. If \(X\) is a discrete random variable, there is a nonnegative function \(p\), the probability mass function of \(X\), such that for any set \(A \subset \Re\),

\[ {\mathbb P} \{ X \in A \} = \sum_{i: x_i \in A} p(x_i). \]

The value \(p(x_i) = {\mathbb P} \{X = x_i\}\), and \(\sum_{i=1}^\infty p(x_i) = 1\).

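Example. Let \(\lambda > 0\), let \(x_i = i-1\) for \(i=1, 2, \ldots\), and let \(p(x_i) = e^{-\lambda} \lambda^{x_i}/x_i!\). Then \(p(x_i) > 0\) and

\[ \sum_{i=1}^\infty p(x_i) = e^{-\lambda} \sum_{j=0}^\infty \frac{\lambda^j}{j!} = e^{-\lambda} e^{\lambda} = 1. \]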

Hence, \(p(x)\) is the probability mass function of a discrete random variable. A random variable with this probability mass function is said to be Poisson distributed (with parameter \(\lambda\)). Poisson-distributed random variables are often used to model rare events.

Example. Let \(x_i = i\) for \(i=1, \ldots, n\), and let \(p(x_i) = 1/n\) and \(p(x) = 0\), otherwise. Then \(p(x) \ge 0\) and \(\sum_{x_i} p(x_i) = 1\). Hence, \(p(x)\) can be the probability mass function of a discrete random variable. A random variable with this probability mass function is said to be uniformly distributed on \(1, \ldots, n\).

Example. Let \(x_i = i-1\) for \(i=1, \ldots, n+1\), and let \(p(x_i) = {n \choose x_i} p^{x_i} (1-p)^{n-x_i}\), and \(p(x) = 0\) otherwise. Then \(p(x) \ge 0\) and

\[ \sum_{x_i} p(x_i) = \sum_{j=0}^n {n \choose j} p^j (1-p)^{n-j} = 1 \]

by the binomial theorem. Hence \(p(x)\) is the probability mass function of a discrete random variable. A random variable with this probability mass function is said to be binomially distributed with parameters \(n\) and \(p\). The number of successes in \(n\) independent trials that each have the same probability \(p\) of success has a binomial distribution with parameters \(n\) and \(p\). For instance, the number of times a fair die lands with 3 spots showing in 10 independent rolls has a binomial distribution with parameters \(n=10\) and \(p = 1/6\).
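The die example can be made concrete with a few lines of R. This is a sketch that evaluates the binomial probability mass function from its formula and compares it with the built-in `dbinom()`; the particular questions asked (exactly two 3s, at least two 3s) are illustrative.

```r
n <- 10       # number of rolls
p <- 1/6      # chance a fair die shows 3 spots on a single roll
k <- 0:n      # possible numbers of rolls that show 3 spots

# Probability mass function from the formula choose(n, k) p^k (1-p)^(n-k)
pmf <- choose(n, k) * p^k * (1 - p)^(n - k)

# It agrees with R's built-in dbinom()
max_diff <- max(abs(pmf - dbinom(k, size = n, prob = p)))
max_diff

# Chance of exactly two 3s, and of at least two 3s, in 10 rolls
p_two <- pmf[k == 2]
p_at_least_two <- sum(pmf[k >= 2])
c(p_two, p_at_least_two)
```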

For general random variables, the chance that \(X\) is in some subset of \(\Re\) cannot be written as a sum or as a Riemann integral; it is more naturally represented as a Lebesgue integral (with respect to a measure other than Lebesgue measure). For example, imagine a random variable \(X\) that has probability \(\alpha\) of being equal to zero; and if \(X\) is not zero, it has a uniform distribution on the interval \([0, 1]\). Such a random variable is neither continuous nor discrete.
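A simulation can make the mixed example tangible. The sketch below assumes \(\alpha = 0.3\) (an arbitrary illustrative value) and draws from the distribution just described: zero with probability \(\alpha\), otherwise uniform on \([0, 1]\).

```r
set.seed(1)            # for reproducibility
alpha <- 0.3           # chance that X equals zero (illustrative value)
n_sim <- 100000

# With probability alpha, X = 0; otherwise X is uniform on [0, 1]
is_zero <- runif(n_sim) < alpha
X <- ifelse(is_zero, 0, runif(n_sim))

# X has an atom at zero, so it has no density ...
frac_zero <- mean(X == 0)
# ... but the rest of its probability is spread over an interval,
# so it has no probability mass function either.
# P{X <= 0.5} combines the atom with the uniform part:
# alpha + (1 - alpha) * 0.5
frac_half <- mean(X <= 0.5)
c(frac_zero, alpha, frac_half, alpha + (1 - alpha) * 0.5)
```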

Most of the random variables in this class are either discrete or continuous.

If \(X\) is a random variable such that, for some constant \(x_1 \in \Re\), \({\mathbb P}(X = x_1) = 1\), \(X\) is called a constant random variable.

Exercises¶

Show analytically that \(\sum_{x_i} p(x_i) = \sum_{j=0}^n {n \choose j} p^j (1-p)^{n-j} = 1\).

Write an R script that verifies that equation numerically for \(n=10\): for 1000 values of \(p\) equispaced on the interval \((0, 1)\), find the maximum absolute value of the difference between the sum and 1.

Let \(p \in (0, 1]\); let \(x_i = i\) for \(i = 1, 2, \ldots\); and define \(p(x_i) = (1-p)^{x_i-1}p\), and \(p(x) = 0\) otherwise. Show analytically that \(p(x)\) is the probability mass function of a discrete random variable. (A random variable with this probability mass function is said to be geometrically distributed with parameter \(p\).)

Starting from Kolmogorov’s three axioms, show that \({\mathbb P}(A \cup B) = {\mathbb P}(A) + {\mathbb P}(B) - {\mathbb P}(AB)\).

Starting from Kolmogorov’s three axioms, show that \({\mathbb P}(A \cup B \cup C) = {\mathbb P}(A) + {\mathbb P}(B) + {\mathbb P}(C) - {\mathbb P}(AB) - {\mathbb P}(AC) - {\mathbb P}(BC) + {\mathbb P}(ABC)\).

Starting from Kolmogorov’s three axioms, show that \({\mathbb P}(AB) \le \min({\mathbb P}(A),{\mathbb P}(B))\).

Jointly Distributed Random Variables¶

Often we work with more than one random variable at a time. Indeed, much of this course concerns random vectors, the components of which are individual real-valued random variables.

The joint probability distribution of a collection of random variables \(\{X_i\}_{i=1}^n\) gives the probability that the variables simultaneously fall in subsets of their possible values. That is, for every (suitable) subset \( A \subset \Re^n\), the joint probability distribution of \(\{X_i\}_{i=1}^n\) gives \({\mathbb P} \{ (X_1, \ldots, X_n) \in A \}\).

An event determined by the random variable \(X\) is an event of the form \(X \in A\), where \(A \subset \Re\).

An event determined by the random variables \(\{X_j\}_{j \in J}\) is an event of the form \((X_j)_{j \in J} \in A\), where \(A \subset \Re^{\#J}\).

Two random variables \(X_1\) and \(X_2\) are independent if every event determined by \(X_1\) is independent of every event determined by \(X_2\). If two random variables are not independent, they are dependent.

A collection of random variables \(\{X_i\}_{i=1}^n\) is independent if every event determined by every subset of those variables is independent of every event determined by any disjoint subset of those variables. If a collection of random variables is not independent, it is dependent.

Loosely speaking, a collection of random variables is independent if learning the values of some of them tells you nothing about the values of the rest of them. If learning the values of some of them tells you anything about the values of the rest of them, the collection is dependent.

For instance, imagine tossing a fair coin twice and rolling a fair die. Let \(X_1\) be the number of times the coin lands heads, and \(X_2\) be the number of spots that show on the die. Then \(X_1\) and \(X_2\) are independent: learning how many times the coin lands heads tells you nothing about what the die did.

On the other hand, let \(X_1\) be the number of times the coin lands heads, and let \(X_2\) be the sum of the number of heads and the number of spots that show on the die. Then \(X_1\) and \(X_2\) are dependent. For instance, if you know the coin landed heads twice, you know that the sum of the number of heads and the number of spots must be at least 3.
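Both coin-and-die examples can be verified exactly by listing the 24 equally likely outcomes. The sketch below relies on the fact that discrete random variables are independent exactly when their joint probabilities factor into the product of the marginals; the helper `is_product()` is a hypothetical name introduced here.

```r
# All 2 * 2 * 6 = 24 equally likely outcomes of two coin tosses and one die roll
outcomes <- expand.grid(toss1 = 0:1, toss2 = 0:1, die = 1:6)
heads <- outcomes$toss1 + outcomes$toss2    # X_1: number of heads
spots <- outcomes$die                       # X_2 in the first example
total <- heads + spots                      # X_2 in the second example

# Joint distributions as tables of probabilities
joint_indep <- table(heads, spots) / nrow(outcomes)
joint_dep   <- table(heads, total) / nrow(outcomes)

# For independent variables the joint table is the outer product of the marginals
is_product <- function(joint) {
  isTRUE(all.equal(unclass(joint),
                   outer(rowSums(joint), colSums(joint)),
                   check.attributes = FALSE))
}
is_product(joint_indep)   # heads and spots
is_product(joint_dep)     # heads and heads + spots
```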

Expectation¶

See SticiGui: The Long Run and the Expected Value for an elementary introduction to expectation.

The expectation or expected value of a random variable \(X\), denoted \({\mathbb E}X\), is a probability-weighted average of its possible values. From a frequentist perspective, it is the long-run limit (in probability) of the average of its values in repeated experiments. The expected value of a real-valued random variable (when it exists) is a fixed number, not a random value. The expected value depends on the probability distribution of \(X\) but not on any realized value of \(X\). If two random variables have the same probability distribution, they have the same expected value.

Properties of Expectation¶

For any real \(\alpha \in \Re\), if \({\mathbb P} \{X = \alpha\} = 1\), then \({\mathbb E}X = \alpha\): the expected value of a constant random variable is that constant.

For any real \(\alpha \in \Re\), \({\mathbb E}(\alpha X) = \alpha {\mathbb E}X\): scalar homogeneity.

If \(X\) and \(Y\) are random variables, \({\mathbb E}(X+Y) = {\mathbb E}X + {\mathbb E}Y\): additivity.

Calculating Expectation¶

If \(X\) is a continuous real-valued random variable with density \(f(x)\), then the expected value of \(X\) is

\[ {\mathbb E}X = \int_{-\infty}^\infty x f(x) dx, \]

provided the integral exists.

Example. Suppose \(X\) has density \(f(x) = \frac{1}{b-a}\) for \(a \le x \le b\) and \(0\) otherwise. Then \( {\mathbb E}X = \int_{-\infty}^\infty x f(x) dx = \frac{1}{b-a} \int_a^b x dx = \frac{b^2-a^2}{2(b-a)} = \frac{a+b}{2}\).

If \(X\) is a discrete real-valued random variable with probability mass function \(p\), then the expected value of \(X\) is

\[ {\mathbb E}X = \sum_i x_i p(x_i), \]

where \(\{x_i\} = \{ x \in \Re: p(x) > 0\}\), provided the sum exists.

Example. Suppose \(X\) has a Poisson distribution with parameter \(\lambda\). Then \({\mathbb E}X = e^{-\lambda} \sum_{j=0}^\infty j \lambda^j/j! = \lambda\).
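The two worked examples can be checked numerically. In this sketch the endpoints `a`, `b` and the parameter `lambda` are arbitrary illustrative values, and the infinite Poisson sum is truncated at 200 terms, which is an approximation.

```r
# Uniform on [a, b]: E X should be (a + b) / 2
a <- 2; b <- 5                       # illustrative endpoints
f <- function(x) rep(1 / (b - a), length(x))
EX_unif <- integrate(function(x) x * f(x), lower = a, upper = b)$value
c(EX_unif, (a + b) / 2)

# Poisson with parameter lambda: E X should be lambda.
# The infinite sum is truncated at j = 200, where the terms are negligible.
lambda <- 3.7                        # illustrative value
j <- 0:200
EX_pois <- sum(j * dpois(j, lambda))
c(EX_pois, lambda)
```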

Variance, Standard Error, and Covariance¶

See SticiGui: Standard Error for an elementary introduction to variance and standard error.

The variance of a random variable \(X\) is \(\mbox{Var }X = {\mathbb E}(X - {\mathbb E}X)^2\).

Algebraically, the following identity holds:

\[ \mbox{Var }X = {\mathbb E}X^2 - ({\mathbb E}X)^2. \]

However, this is generally not a good way to calculate \(\mbox{Var} X\) numerically, because of roundoff: it sacrifices precision unnecessarily.
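A small experiment shows the loss of precision. The sketch below treats four illustrative numbers as the equally likely values of \(X\) and computes the variance two ways: from the definition \({\mathbb E}(X - {\mathbb E}X)^2\), and from the shortcut "mean of squares minus square of the mean."

```r
# Four equally likely values with a large mean and a small spread (illustrative)
x <- 1e9 + c(4, 7, 13, 16)

# From the definition: average squared deviation from the mean
var_two_pass <- mean((x - mean(x))^2)

# Shortcut: mean of squares minus square of the mean.
# Both terms are near 1e18, where adjacent doubles are 128 apart,
# so the small difference between them is lost to rounding.
var_shortcut <- mean(x^2) - mean(x)^2

c(two_pass = var_two_pass, shortcut = var_shortcut)
```

The deviations from the mean are \(-6, -3, 3, 6\), so the variance is \((36 + 9 + 9 + 36)/4 = 22.5\); the first calculation recovers that value, while the shortcut cannot.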

The standard error of a random variable \(X\) is \(\mbox{SE }X = \sqrt{\mbox{Var } X}\).

If \(\{X_i\}_{i=1}^n\) are independent, then \(\mbox{Var} \sum_{i=1}^n X_i = \sum_{i=1}^n \mbox{Var }X_i\).

If \(X\) and \(Y\) have a joint distribution, then \(\mbox{cov} (X,Y) = {\mathbb E} (X - {\mathbb E}X)(Y - {\mathbb E}Y)\). It follows from this definition (and the commutativity of multiplication) that \(\mbox{cov}(X,Y) = \mbox{cov}(Y,X)\). Also,

\[ \mbox{Var }(X+Y) = \mbox{Var }X + \mbox{Var }Y + 2\mbox{cov}(X,Y). \]

If \(X\) and \(Y\) are independent, \(\mbox{cov }(X,Y) = 0\). However, the converse is not necessarily true: \(\mbox{cov}(X,Y) = 0\) does not in general imply that \(X\) and \(Y\) are independent.
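A standard counterexample, sketched here in R, takes \(X\) uniform on \(\{-1, 0, 1\}\) and \(Y = X^2\). The choice of distribution is illustrative; the calculation is exact because it enumerates the three possible values.

```r
# X is uniform on {-1, 0, 1}; Y = X^2 is a function of X
x <- c(-1, 0, 1)
p <- rep(1/3, 3)
y <- x^2

EX <- sum(x * p)
EY <- sum(y * p)
cov_XY <- sum((x - EX) * (y - EY) * p)
cov_XY                                  # zero

# Yet X and Y are dependent: compare P{X = 0, Y = 0} with P{X = 0} P{Y = 0}
p_joint   <- sum(p[x == 0 & y == 0])
p_product <- sum(p[x == 0]) * sum(p[y == 0])
c(p_joint, p_product)
```

The covariance vanishes because the dependence of \(Y\) on \(X\) is symmetric about zero, yet knowing \(X\) determines \(Y\) completely.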

Random Vectors¶

Suppose \(\{X_i\}_{i=1}^n\) are jointly distributed random variables, and let

\[ X = \begin{pmatrix} X_1 \\ X_2 \\ \vdots \\ X_n \end{pmatrix}. \]

Then \(X\) is a random vector, an \(n\) by \(1\) vector of real-valued random variables.

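The expected value of \(X\) is

\[ {\mathbb E}X \equiv \begin{pmatrix} {\mathbb E}X_1 \\ {\mathbb E}X_2 \\ \vdots \\ {\mathbb E}X_n \end{pmatrix}. \]

The covariance matrix of \(X\) is

\[ \mbox{cov } X \equiv {\mathbb E} (X - {\mathbb E}X)(X - {\mathbb E}X)^T, \]

the \(n\) by \(n\) matrix whose \((i, j)\) entry is \(\mbox{cov}(X_i, X_j)\).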

Covariance matrices are always positive semidefinite.
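One way to see why: for any fixed vector \(a\), \(a^T (\mbox{cov } X) a = \mbox{Var}(a^T X) \ge 0\). The R sketch below illustrates this with a made-up matrix `A`; it assumes \(X = AZ\), where \(Z\) has uncorrelated components with unit variance, so that \(\mbox{cov } X = AA^T\).

```r
# Illustrative covariance matrix: if Z has uncorrelated components with unit
# variance and X = A Z, then cov X = A A^T. (A is an arbitrary example.)
A <- matrix(c(1, 2, 0,
              0, 1, 3), nrow = 3, ncol = 2)
Sigma <- A %*% t(A)

# All eigenvalues are nonnegative (up to rounding); here one is zero,
# so Sigma is positive semidefinite but not positive definite.
ev <- eigen(Sigma, symmetric = TRUE)$values
ev

# Equivalently, a^T Sigma a = Var(a^T X) >= 0 for every vector a
set.seed(2)
a <- matrix(rnorm(3 * 1000), nrow = 3)       # 1000 random directions
quad_forms <- colSums(a * (Sigma %*% a))
min(quad_forms)
```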

The Multivariate Normal Distribution¶

The notation \(X \sim {\mathcal N}(\mu, \sigma^2)\) means that \(X\) has a normal distribution with mean \(\mu\) and variance \(\sigma^2\). This distribution is continuous, with probability density function

\[ f(x) = \frac{1}{\sqrt{2\pi}\sigma} e^{-\frac{(x-\mu)^2}{2\sigma^2}}. \]

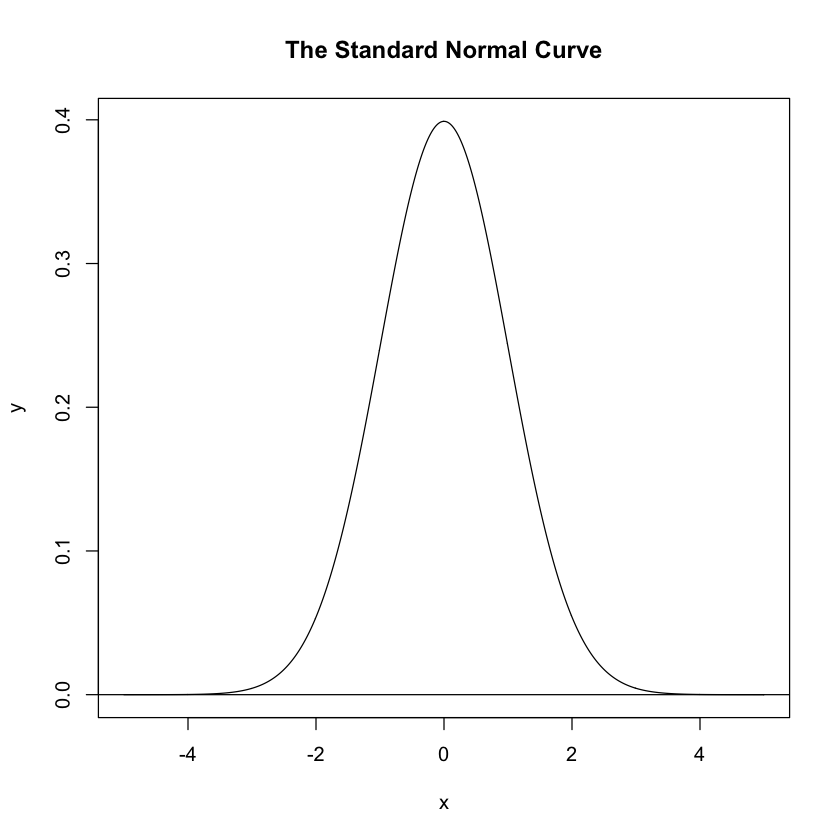

If \(X \sim {\mathcal N}(\mu, \sigma^2)\), then \(\frac{X-\mu}{\sigma} \sim {\mathcal N}(0, 1)\), the standard normal distribution. The probability density function of the standard normal distribution is

\[ \phi(x) = \frac{1}{\sqrt{2\pi}} e^{-x^2/2}. \]

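## Plot the normal density
x <- seq(from = -5, to = 5, by = 0.01)    # grid of points on [-5, 5]
y <- (2*pi)^(-1/2) * exp(-x^2/2)          # standard normal density at each point
plot(x, y, type = "n")                    # set up the axes without drawing
abline(h = 0)                             # horizontal axis
lines(x, y)                               # draw the density curve
title(main = "The Standard Normal Curve")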

A collection of random variables \(\{ X_1, X_2, \ldots, X_n\} = \{X_j\}_{j=1}^n\) is jointly normal if all linear combinations of those variables have normal distributions. That is, the collection is jointly normal if for all \(\alpha \in \Re^n\), \(\sum_{j=1}^n \alpha_j X_j\) has a normal distribution.

If \(\{X_j \}_{j=1}^n\) are independent, normally distributed random variables, they are jointly normal.

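If for some \(\mu \in \Re^n\) and positive-definite matrix \(G\), the joint density of \(\{X_j \}_{j=1}^n\) is

\[ f(x) = (2\pi)^{-n/2} |G|^{-1/2} \exp \left \{ -\frac{1}{2} (x - \mu)^T G^{-1} (x-\mu) \right \}, \]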

then \(\{X_j \}_{j=1}^n\) are jointly normal, and the covariance matrix of \(\{X_j\}_{j=1}^n\) is \(G\).
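The sketch below evaluates the standard multivariate normal density, \((2\pi)^{-n/2} |G|^{-1/2} \exp\{-\frac{1}{2}(x-\mu)^T G^{-1} (x-\mu)\}\), and draws samples whose covariance matrix is approximately \(G\). The values of `mu` and `G` and the function name `dmvn` are illustrative assumptions; sampling through a Cholesky factor is one common approach, not the only one.

```r
mu <- c(1, -2)                            # illustrative mean vector
G  <- matrix(c(2.0, 0.6,
               0.6, 1.0), nrow = 2)       # illustrative positive-definite matrix

# Density (2 pi)^(-n/2) |G|^(-1/2) exp(-(x - mu)^T G^{-1} (x - mu) / 2)
dmvn <- function(x, mu, G) {
  n <- length(mu)
  d <- x - mu
  q <- sum(d * solve(G, d))               # quadratic form without inverting G
  (2 * pi)^(-n / 2) * det(G)^(-1 / 2) * exp(-q / 2)
}
dens_at_mu <- dmvn(mu, mu, G)

# Draw samples as mu + L Z, where L L^T = G and Z has iid N(0, 1) components
set.seed(3)
n_sim <- 100000
L <- t(chol(G))                           # chol() returns the upper factor
Z <- matrix(rnorm(2 * n_sim), nrow = 2)
X <- mu + L %*% Z                         # each column is one draw

sample_mean <- rowMeans(X)
sample_cov  <- cov(t(X))
sample_mean
sample_cov
```

Every component of \(\mu + LZ\) is a linear combination of independent normal variables, so the draws are jointly normal by the definition given earlier in this section.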

Example¶

[TO DO!]

The Central Limit Theorem¶

For an elementary discussion, see SticiGui: The Normal Curve, The Central Limit Theorem, and Markov’s and Chebychev’s Inequalities for Random Variables.

Suppose \(\{X_j \}_{j=1}^\infty\) are independent and identically distributed (iid), have finite expected value \({\mathbb E}X_j = \mu\), and have finite variance \(\mbox{var }X_j = \sigma^2\).

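Define the sum \(S_n \equiv \sum_{j=1}^n X_j\). Then

\[ {\mathbb E}S_n = \sum_{j=1}^n {\mathbb E}X_j = n\mu \]

and

\[ \mbox{var }S_n = \sum_{j=1}^n \mbox{var }X_j = n\sigma^2. \]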

(The last step follows from the independence of \(\{X_j\}\): the variance of the sum is the sum of the variances.)

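Define \(Z_n \equiv \frac{S_n - n\mu}{\sqrt{n}\sigma}\). Then for every \(a, b \in \Re\) with \(a \le b\),

\[ \lim_{n \rightarrow \infty} {\mathbb P} \{ a \le Z_n \le b \} = \frac{1}{\sqrt{2\pi}} \int_a^b e^{-x^2/2} dx. \]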

This is a basic form of the Central Limit Theorem.
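A simulation gives a feel for how quickly the normal approximation to \({\mathbb P}\{a \le Z_n \le b\}\) takes hold. This sketch assumes the \(X_j\) are uniform on \([0, 1]\), with \(n = 30\), \(a = -1\), and \(b = 1.5\); all of these are illustrative choices.

```r
set.seed(4)
n      <- 30             # terms in each sum
n_sim  <- 20000          # number of simulated sums
mu     <- 1 / 2          # mean of a uniform [0, 1] variable
sigma  <- sqrt(1 / 12)   # its standard deviation

# Each row holds one realization of X_1, ..., X_n
X   <- matrix(runif(n * n_sim), nrow = n_sim)
S_n <- rowSums(X)
Z_n <- (S_n - n * mu) / (sqrt(n) * sigma)

a <- -1; b <- 1.5
p_sim    <- mean(a <= Z_n & Z_n <= b)   # simulated P{a <= Z_n <= b}
p_normal <- pnorm(b) - pnorm(a)         # standard normal probability of [a, b]
c(p_sim, p_normal)
```

For skewed or heavy-tailed distributions the agreement at the same \(n\) can be noticeably worse; the theorem itself says nothing about the rate of convergence.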

Probability Inequalities and Identities¶

This follows Lugosi (2006) rather closely; it’s also a repeat of the results in the chapter on Mathematical Preliminaries, which we largely omitted.

The tail-integral formula for expectation¶

If \(X\) is a nonnegative real-valued random variable,

\[ {\mathbb E}X = \int_0^\infty {\mathbb P}\{X \ge x\} dx. \]
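The tail-integral formula, \({\mathbb E}X = \int_0^\infty {\mathbb P}\{X \ge x\} dx\) for nonnegative \(X\), can be checked numerically. The sketch uses an exponential and a Poisson distribution with illustrative parameters; for an integer-valued variable the integral reduces to \(\sum_{k \ge 1} {\mathbb P}\{X \ge k\}\), truncated here at 200 terms.

```r
rate <- 0.4     # illustrative rate; the exponential mean is 1 / rate

# Survival function P{X >= x} of the exponential distribution
surv <- function(x) pexp(x, rate = rate, lower.tail = FALSE)

# Integrating the tail probabilities recovers the expected value
EX_tail <- integrate(surv, lower = 0, upper = Inf)$value
c(EX_tail, 1 / rate)

# Discrete analogue: for X ~ Poisson(lambda), sum_{k >= 1} P{X >= k} = lambda
lambda <- 3
k <- 1:200
EX_pois_tail <- sum(ppois(k - 1, lambda, lower.tail = FALSE))
c(EX_pois_tail, lambda)
```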

Jensen’s Inequality¶

If \(\phi\) is a convex function from \({\mathcal X}\) to \(\Re\), then \(\phi({\mathbb E} X) \le {\mathbb E} \phi(X)\).
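A quick numerical illustration, taking \(X\) to be the number of spots on a fair die (an illustrative distribution) and three convex functions:

```r
x <- 1:6                 # spots on a fair die
p <- rep(1/6, 6)
EX <- sum(x * p)

# phi(x) = x^2 is convex: phi(E X) <= E phi(X)
jensen_sq <- c(lhs = EX^2, rhs = sum(x^2 * p))
jensen_sq

# phi(x) = exp(x) is convex as well
jensen_exp <- c(lhs = exp(EX), rhs = sum(exp(x) * p))
jensen_exp

# -log is convex on (0, Inf), so -log(E X) <= E(-log X)
jensen_neglog <- c(lhs = -log(EX), rhs = sum(-log(x) * p))
jensen_neglog
```

With \(\phi(x) = x^2\), the gap between the two sides is exactly the variance of \(X\).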

The Chernoff Bound¶

Apply the Generalized Markov Inequality with \(f(x) = e^{sx}\) for positive \(s\) to obtain the Chernoff Bound:

\[ {\mathbb P}\{X \ge t\} = {\mathbb P}\{e^{sX} \ge e^{st}\} \le e^{-st} {\mathbb E} e^{sX} \]

for all \(s > 0\). For particular \(X\), one can optimize over \(s\).
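To illustrate the optimization over \(s\), the sketch below takes \(X \sim {\mathcal N}(0, 1)\), for which \({\mathbb E}e^{sX} = e^{s^2/2}\), so the bound \(e^{-st}{\mathbb E}e^{sX}\) on \({\mathbb P}\{X \ge t\}\) equals \(e^{-st + s^2/2}\). The threshold `t0 = 2` and the search interval are illustrative choices.

```r
t0 <- 2     # illustrative threshold

# For X ~ N(0, 1), E exp(sX) = exp(s^2 / 2), so the bound is exp(-s t + s^2 / 2)
chernoff <- function(s) exp(-s * t0 + s^2 / 2)

# Minimize the bound over s > 0 numerically
opt <- optimize(chernoff, interval = c(0, 10))
s_best     <- opt$minimum      # analytically, the minimizer is s = t
best_bound <- opt$objective    # analytically, exp(-t^2 / 2)

true_tail <- pnorm(t0, lower.tail = FALSE)
c(s_best = s_best, bound = best_bound, exact = true_tail)
```

The optimized bound \(e^{-t^2/2}\) has the right exponential rate but is larger than the exact tail probability.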

Hoeffding’s Inequality¶

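Let \(\{ X_j \}_{j=1}^n\) be independent, and define \(S_n \equiv \sum_{j=1}^n X_j\). Applying the Chernoff Bound yields

\[ {\mathbb P} \{ S_n - {\mathbb E}S_n \ge t \} \le e^{-st} {\mathbb E} e^{s(S_n - {\mathbb E}S_n)} = e^{-st} \prod_{j=1}^n {\mathbb E} e^{s(X_j - {\mathbb E}X_j)} \]

for every \(s > 0\); the product form uses the independence of \(\{X_j\}\).

Hoeffding bounds the moment generating function for a bounded random variable \(X\): If \(a \le X \le b\) with probability 1, then

\[ {\mathbb E} e^{s(X - {\mathbb E}X)} \le e^{s^2 (b-a)^2/8}, \]

from which follows Hoeffding’s tail bound.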

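If \(\{X_j\}_{j=1}^n\) are independent and \({\mathbb P} \{a_j \le X_j \le b_j\} = 1\), then for every \(t > 0\),

\[ {\mathbb P} \{ S_n - {\mathbb E}S_n \ge t \} \le e^{-2t^2/\sum_{j=1}^n (b_j - a_j)^2} \]

and

\[ {\mathbb P} \{ S_n - {\mathbb E}S_n \le -t \} \le e^{-2t^2/\sum_{j=1}^n (b_j - a_j)^2}. \]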
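For a sense of how tight the tail bound is, the sketch below compares Hoeffding's upper-tail bound, \(e^{-2t^2/\sum_j (b_j - a_j)^2}\), with the exact tail probability when the \(X_j\) are independent Bernoulli(1/2) variables, so that \(a_j = 0\), \(b_j = 1\), and \(S_n\) is binomial. The values of `n` and `t0` are illustrative.

```r
n  <- 100             # number of independent Bernoulli(1/2) terms, each in [0, 1]
t0 <- c(5, 10, 15)    # deviations of S_n above its mean n / 2

# Hoeffding's bound with a_j = 0, b_j = 1: exp(-2 t^2 / n)
hoeffding <- exp(-2 * t0^2 / n)

# Exact upper tail P{S_n - n/2 >= t} from the binomial distribution
exact <- pbinom(n / 2 + t0 - 1, size = n, prob = 1/2, lower.tail = FALSE)

cbind(t = t0, exact = exact, hoeffding = hoeffding)
```

The bound holds for every \(t\) but is conservative; it uses only the range of each \(X_j\), not its variance.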

Hoeffding’s Other Inequality¶

Suppose \(f\) is a convex, real function and \({\mathcal X}\) is a finite set. Let \(\{X_j \}_{j=1}^n\) be a simple random sample from \({\mathcal X}\) and let \(\{Y_j \}_{j=1}^n\) be an iid uniform random sample (with replacement) from \({\mathcal X}\). Then

\[ {\mathbb E} f \left ( \sum_{j=1}^n X_j \right ) \le {\mathbb E} f \left ( \sum_{j=1}^n Y_j \right ). \]

Bernstein’s Inequality¶

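Suppose \(\{X_j \}_{j=1}^n\) are independent with \({\mathbb E} X_j = 0\) for all \(j\), \({\mathbb P} \{ | X_j | \le c\} = 1\), \(\sigma_j^2 = {\mathbb E} X_j^2\) and \(\sigma^2 = \frac{1}{n} \sum_{j=1}^n \sigma_j^2\). Then for any \(\epsilon > 0\),

\[ {\mathbb P} \left \{ \frac{1}{n} \sum_{j=1}^n X_j > \epsilon \right \} \le \exp \left \{ - \frac{n \epsilon^2}{2\sigma^2 + 2c\epsilon/3} \right \}. \]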
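The sketch below compares Bernstein's bound with Hoeffding's for the same event. It assumes Bernstein's inequality in its standard form, \({\mathbb P}\{\frac{1}{n}\sum_j X_j > \epsilon\} \le \exp\{-n\epsilon^2/(2\sigma^2 + 2c\epsilon/3)\}\) with \(\sigma^2\) the average variance, and uses Hoeffding's bound with \(a_j = -c\), \(b_j = c\), which gives \(\exp\{-n\epsilon^2/(2c^2)\}\). The numerical values are illustrative.

```r
n   <- 1000
c0  <- 1                          # bound on |X_j|
eps <- 0.1
sigma2 <- c(1, 0.25, 0.02)        # illustrative average variances

# Bernstein's bound depends on the variance; Hoeffding's does not
bernstein <- exp(-n * eps^2 / (2 * sigma2 + 2 * c0 * eps / 3))
hoeffding <- exp(-n * eps^2 / (2 * c0^2))

cbind(sigma2, bernstein, hoeffding)
```

When the variance is as large as the bound \(c\) allows, Hoeffding's bound is slightly smaller; when the variance is small relative to \(c^2\), Bernstein's bound is far smaller.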
